SoundSeeker is a fascinating project because it brings together photos and music. Each art form has theoretical underpinnings that machine learning can index and understand in isolation, but pairing a photo with music for a pleasing result is an emotional and subjective process. Working with a great team made designing the music system a truly collaborative experience, one that balanced science and art.

The background

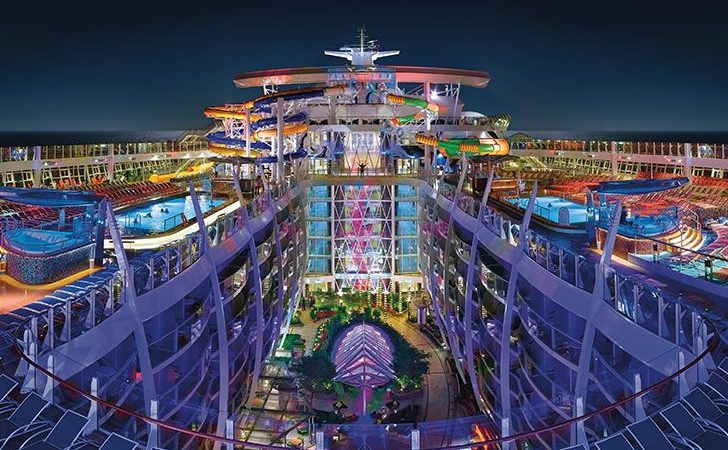

If you’ve ever been on a Royal Caribbean cruise, you know it’s much more than a “cruise.” It’s an adventure, and not one you’re likely to forget. Royal Caribbean wanted a digital activation that brought its brand ethos to life and got potential cruisers excited to climb on board.

The execution

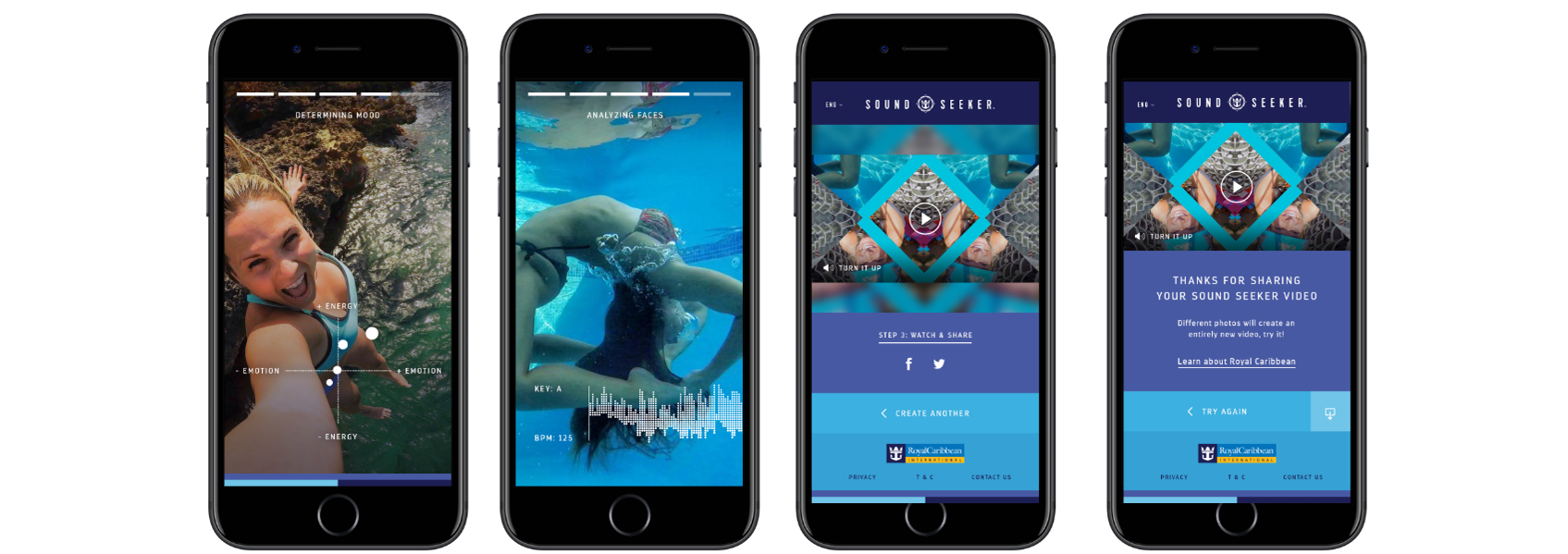

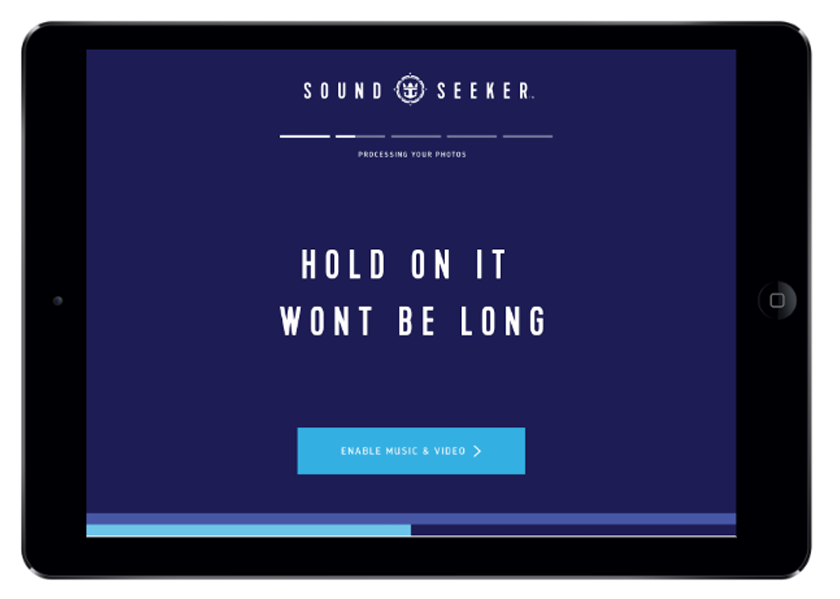

SoundSeeker is an AI-driven experience that analyzes the emotions in users’ vacation photos and turns them into ultra-shareable videos set to a custom soundtrack. As humans, we often hear a piece of music and find that it surfaces a memory or emotion. SoundSeeker flips that notion on its head: it takes emotions and memories and gives them the music that will make them even more powerful.

We had to design, develop and train a custom Machine Learning algorithm to generate music that matches almost any image in overall mood and content. This kind of understanding is highly subjective and very “human” in nature, which makes it difficult for Machine Learning models to grasp. We approached the problem by focusing on gathering data first.

Our custom training tool allowed tastemakers to assign music to images based on their personal taste. From there, we analyzed that music to extract its musical features and evaluated every image with Google Cloud Vision. The information about each image’s content, its raw pixels, and the extracted musical features were then fed to our Machine Learning model to learn from.

But the result wasn’t just a music mix set to images; it was a bespoke audio and video animation in which the user-uploaded images animate in sync with the AI-generated music. To produce it, we developed a custom server-side rendering pipeline based on After Effects templates, while using only open source technology for the actual rendering. The pipeline let us specify variables and feed them values dynamically for each render, producing a unique variation of the base templates every time. The infrastructure we developed could render a 30-second, 960×540 video in 15 seconds, twice as fast as real time.
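To make the training data flow concrete (image content labels plus raw pixels on one side, the musical features of a tastemaker’s chosen track on the other), here is a minimal sketch. The case study does not describe SoundSeeker’s actual model, so this uses a simple k-nearest-neighbour lookup as a stand-in, not the project’s method. The label vocabulary, the three-number music feature layout, and all toy values are hypothetical; the label/score pairs only mimic the shape of the output of Cloud Vision label detection.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Tuple

# Hypothetical label vocabulary; a real system would use whatever labels the
# image-analysis step (e.g. Cloud Vision label detection) actually returns.
LABEL_VOCAB = ["beach", "sunset", "ocean", "people", "food", "night"]


@dataclass
class TrainingExample:
    labels: Dict[str, float]            # label -> confidence score for one image
    pixels: List[Tuple[int, int, int]]  # downsampled RGB pixels of that image
    music: List[float]                  # hypothetical features of the chosen track, e.g. [tempo, energy, valence]


def image_features(labels: Dict[str, float], pixels: List[Tuple[int, int, int]]) -> List[float]:
    """Concatenate label scores (in LABEL_VOCAB order) with the mean RGB color scaled to [0, 1]."""
    label_vec = [labels.get(name, 0.0) for name in LABEL_VOCAB]
    n = len(pixels)
    mean_rgb = [sum(p[c] for p in pixels) / (255.0 * n) for c in range(3)]
    return label_vec + mean_rgb


def predict_music(examples: List[TrainingExample], labels: Dict[str, float],
                  pixels: List[Tuple[int, int, int]], k: int = 3) -> List[float]:
    """Stand-in model: average the musical features of the k most similar training images."""
    query = image_features(labels, pixels)
    ranked = sorted(examples, key=lambda ex: math.dist(query, image_features(ex.labels, ex.pixels)))
    nearest = ranked[:k]
    return [sum(ex.music[d] for ex in nearest) / len(nearest) for d in range(len(nearest[0].music))]


# Toy data: three tastemaker pairings (all values invented for illustration).
examples = [
    TrainingExample({"beach": 0.9, "sunset": 0.8}, [(250, 180, 90)] * 4, [0.4, 0.5, 0.9]),
    TrainingExample({"night": 0.9, "people": 0.7}, [(20, 20, 60)] * 4, [0.8, 0.9, 0.6]),
    TrainingExample({"ocean": 0.95}, [(30, 120, 200)] * 4, [0.3, 0.2, 0.7]),
]

print(predict_music(examples, {"beach": 0.85, "sunset": 0.7}, [(240, 170, 100)] * 4, k=1))
# prints [0.4, 0.5, 0.9]
```

A warm beach-at-sunset photo lands closest to the tastemaker’s beach/sunset pairing, so it inherits that track’s features. A learned model would replace the lookup, but the input and output shapes would stay the same.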
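The rendering pipeline’s key idea, a fixed base template whose variables are filled with different values for every render, can be sketched as follows. The case study does not name the open source renderer or describe the template format, so this sketch stops at producing a job specification. The `RenderTemplate` class, the placeholder names, and the file names are hypothetical; only the 960×540 size and the 30-second/15-second figures come from the text.

```python
from dataclasses import dataclass, field
from string import Template
from typing import Dict, List


@dataclass
class RenderTemplate:
    """Hypothetical stand-in for a template exported from After Effects."""
    name: str
    composition: str                           # scene description containing $placeholders
    required: List[str] = field(default_factory=list)


def build_render_job(template: RenderTemplate, values: Dict[str, str],
                     width: int = 960, height: int = 540,
                     seconds: float = 30.0) -> Dict[str, object]:
    """Fill the template's placeholders with per-render values and return a job spec."""
    missing = [key for key in template.required if key not in values]
    if missing:
        raise ValueError(f"missing template variables: {missing}")
    # Template.substitute raises KeyError if the composition uses an unsupplied placeholder.
    scene = Template(template.composition).substitute(values)
    return {"template": template.name, "scene": scene,
            "width": width, "height": height, "seconds": seconds}


def realtime_factor(video_seconds: float, render_seconds: float) -> float:
    """Seconds of finished video produced per second of rendering."""
    return video_seconds / render_seconds


tpl = RenderTemplate("postcard", "photo=$photo; track=$track; cut_on_beat=$beat",
                     ["photo", "track", "beat"])
job = build_render_job(tpl, {"photo": "user/01.jpg", "track": "mix_042.wav", "beat": "0.48"})
print(job["scene"])             # prints photo=user/01.jpg; track=mix_042.wav; cut_on_beat=0.48
print(realtime_factor(30, 15))  # prints 2.0
```

Validating the required variables before rendering means a bad request fails immediately rather than partway through a render. The last line restates the quoted throughput: 30 seconds of video in 15 seconds of rendering is a real-time factor of 2.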

The results

Music theory, focused composition, machine learning, animation and design all worked together to create a fully modular system that delighted users again and again.

We managed to achieve something rare in Machine Learning: a system trained to generate creative output that looks and sounds pleasing to subjective human taste.

Credits

Release Date

2018-06-19